Hello, and welcome to the July 2025 ClickHouse newsletter!

This month, we have a blog post exploring ClickHouse’s join performance vs Databricks and Snowflake, reflections on getting the ClickHouse Certified Developer credential, scaling our observability platform beyond 100 petabytes, using ClickHouse for real-time sports analytics, and more!

Featured community member: Amos Bird

This month's featured community member is Amos Bird, Software Engineer at Tencent.

Amos Bird is the top ClickHouse contributor, with more than 600 pull requests over 8 years. He implemented many ClickHouse features, including projections, common table expressions, and column transformers, along with numerous optimizations such as a hash table for string keys that is segmented by key-size class.

Amos Bird is actively involved in architecture discussions about ClickHouse. Whenever ClickHouse CTO Alexey Milovidov travels to Beijing, he takes the opportunity to meet with Amos Bird and the local ClickHouse community!

Upcoming events

Global events

- v25.7 Community Call - July 24

Training

- Migration to ClickHouse - Hong Kong - July 17

- ClickHouse Fundamentals - Virtual - July 30

- ClickHouse Deep Dive - Bogota, Colombia - August 5

- ClickHouse Deep Dive - Buenos Aires, Argentina - August 7

- ClickHouse Deep Dive - São Paulo, Brazil - August 12

- ClickHouse Deep Dive - Virtual - August 12

- ClickHouse Query Optimization - Virtual - August 27

Events in AMER

- Bogota ClickHouse Meetup - August 5

- Buenos Aires ClickHouse Meetup - August 7

- AWS Summit Toronto - September 4

- AWS Summit Los Angeles - September 17

Events in EMEA

- Tech BBQ Copenhagen - August 27-28

- AWS Summit Zurich - September 11

- HayaData Tel Aviv - September 16

- BigData London - September 24-25

- PyData Amsterdam - September 24-25

- AWS Cloud Day Riyadh - September 29

Events in APAC

- Hong Kong ClickHouse Meetup - July 17

- DataEngBytes Melbourne - July 24-25

- DataEngBytes Sydney - July 29-30

- AWS Startup Dev Day Melbourne - August 1

- KubeCon + CloudNativeCon India - August 6

- AWS Summit Jakarta - August 7

- Philippines Data & AI Conference 2025 - August 14

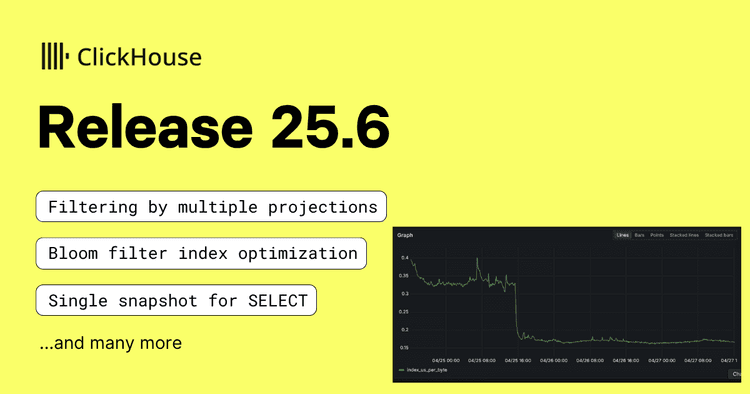

25.6 release

ClickHouse 25.6 has some cool features, including consistent snapshots across complex queries, filtering by multiple projections, and chdig (a built-in monitoring TUI with real-time flamegraphs).

The release also includes the Bloom filter optimization that saved OpenAI during the GPT-4o image generation launch, now available to the entire community.

Using ClickHouse Cloud for real-time sports analytics

In his latest article, Benjamin Wootton demonstrates how you can use ClickHouse Cloud to power real-time sports analytics by processing player position data to create interactive visualizations of movement patterns, distance covered, and team dynamics on the football pitch.

The solution showcases ClickHouse's ability to handle high-frequency sensor data with complex spatial calculations while giving coaches immediate insights during matches, with the flexibility to scale from zero on weekdays to thousands of concurrent users on game day.

Join me if you can: ClickHouse vs. Databricks & Snowflake - Part 1

My colleagues Al Brown and Tom Schreiber challenge the myth that "ClickHouse can't do joins" by running unmodified, join-heavy queries against a coffee-shop-themed benchmark used to compare Databricks and Snowflake.

Without any special tuning, ClickHouse consistently outperformed both competitors across all data scales (721M to 7.2B rows), completing most queries in under a second at a lower cost.

These results stem from six months of targeted improvements to ClickHouse's join capabilities, significantly enhancing speed and scalability. In a follow-up blog post, Al and Tom show how to make things even faster using ClickHouse-specific optimizations.

Reflections on getting the ClickHouse Certified Developer credential

Burak Uyar shares his journey to becoming a ClickHouse Certified Developer in a practical guide highlighting the exam's focus on hands-on SQL queries and real-world problem-solving rather than theoretical knowledge.

This blog provides useful preparation tips if you plan to take the certification soon. It emphasizes mastery of core topics like table engines, query optimization, and architecture through the official documentation and learning paths.

Analytics at scale: Our journey to ClickHouse

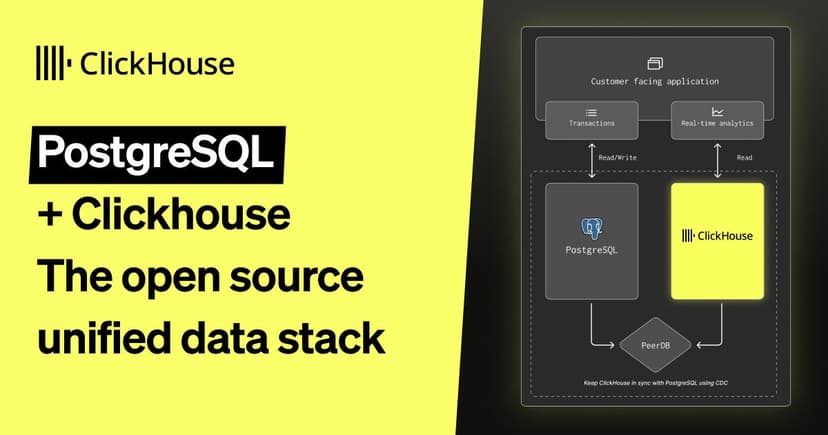

Didier Darricau shares how Partoo tackled the growing pains of their analytics product, where PostgreSQL queries were taking minutes to complete as their data grew to 800M records across 500GB, severely impacting client experiences and limiting their ability to onboard enterprise customers.

After comparing solutions against criteria including performance, editing capabilities, and AWS integration, they found that ClickHouse outperformed Amazon Redshift by 30% in volume testing and handled 10x more parallel queries, making it the best choice for their real-time analytics needs.

Their implementation journey offers valuable insights into the trade-offs between different ClickHouse data modification approaches. When faced with the challenge of updating existing records, Partoo evaluated ReplacingMergeTree and a custom solution, ultimately choosing the latter because it best fit their specific aggregation workload. The result was queries up to 50x faster than their previous implementation.
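To make the ReplacingMergeTree side of that trade-off concrete, here is a simplified Python model of its documented semantics, not ClickHouse's implementation: rows sharing a sorting key are collapsed to the one with the highest version only when parts are merged in the background, so an aggregate computed before a merge can still count superseded rows. The `Row` type, the single-string key, and the shop data are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Row:
    key: str      # stands in for the ORDER BY (sorting key) columns
    version: int  # stands in for the optional version column
    value: int


def merge_parts(parts: list[list[Row]]) -> list[Row]:
    """Collapse rows that share a key, keeping the highest version.

    Parts are visited in insertion order and ties use >=, so among equal
    versions the last inserted row wins.
    """
    latest: dict[str, Row] = {}
    for part in parts:
        for row in part:
            current = latest.get(row.key)
            if current is None or row.version >= current.version:
                latest[row.key] = row
    return sorted(latest.values(), key=lambda r: r.key)


def naive_sum(parts: list[list[Row]]) -> int:
    """Aggregate over unmerged parts, as a query might before a merge runs."""
    return sum(row.value for part in parts for row in part)


# Two inserted parts; the second carries an update to shop-1 (hypothetical data).
parts = [
    [Row("shop-1", 1, 10), Row("shop-2", 1, 5)],
    [Row("shop-1", 2, 12)],
]

print(naive_sum(parts))                          # stale shop-1 row still counted
print(sum(r.value for r in merge_parts(parts)))  # only the latest shop-1 row counted
```

In ClickHouse itself, a query can force this deduplication at read time with `FINAL`, at extra query cost, which is one reason aggregation-heavy workloads sometimes look for alternatives.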

Scaling our Observability platform beyond 100 Petabytes by embracing wide events and replacing OTel

Rory Crispin and Dale McDiarmid explain how our internal LogHouse observability platform scaled from 19PB to over 100PB of data by moving beyond OpenTelemetry's limitations with a purpose-built System Tables Exporter (SysEx).

This custom solution handles 20x more data with 90% less CPU than OpenTelemetry. It embraces a "store everything, aggregate nothing" philosophy that enables powerful fleet-wide analysis using ClickHouse's native capabilities.

Observability 2.0: Breaking the Three-Pillar Silos for Good

Zakaria El Bazi proves to be a kindred spirit who shares our vision at ClickHouse. He examines the shift from traditional "three-pillar" observability to the unified "Observability 2.0" approach that stores all telemetry as rich contextual "wide events" in a columnar database.

Zakaria echoes ClickStack's core philosophy: unified observability eliminates tool fragmentation, dramatically reduces costs (potentially saving companies $450k/year), and enables natural data correlation, making troubleshooting faster and more effective in today's complex distributed systems.

When SIGTERM Does Nothing: A Postgres Mystery

Kevin Biju Kizhake Kanichery’s deep dive into a mysterious PostgreSQL bug where logical replication slots became completely unkillable on read replicas was recently featured in Postgres Weekly.

The investigation identified a subtle issue in PostgreSQL's transaction handling that could threaten database stability. Our patch was accepted and backported to all supported PostgreSQL versions. This work demonstrates our ongoing engagement with the PostgreSQL community and complements our ClickPipes PostgreSQL CDC offering, which enables smooth, reliable data integration between PostgreSQL and ClickHouse.

Quick reads

- Ashkan Golehpour shares advice for PostgreSQL developers moving to ClickHouse, explaining how CTEs work differently in ClickHouse and how this can produce incorrect aggregation results.

- Sajjad Aghapour demonstrates how to enhance ClickHouse data security using SQL SECURITY DEFINER. This feature allows views to execute with the privileges of a predefined user rather than those of the querying user.

- Sanjeev Singh shows how to use projections to speed up queries against the UK price paid dataset.

- Yash Patel provides a practical guide to managing ClickHouse's system tables, which can silently grow to consume more storage than your primary data. He discusses multiple solutions, including using force_drop_table flags to bypass size limits when truncating oversized tables, applying Time-To-Live (TTL) settings to automatically purge older logs, reducing logging levels in configuration, and implementing regular monitoring.
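One commonly cited source of the CTE surprises mentioned above is evaluation strategy. The ClickHouse documentation notes that a CTE is re-executed at each place it is referenced, whereas PostgreSQL (12 and later) materializes a CTE that is referenced more than once by default. The minimal Python sketch below contrasts the two strategies. It is an analogy, not ClickHouse code: `cte_body` is a hypothetical CTE, and the counter is a hypothetical stand-in for a non-deterministic function such as `now()`.

```python
import itertools

# Hypothetical stand-in for a non-deterministic function such as now():
# each read returns a new value.
_clock = itertools.count(start=100)


def cte_body() -> list[int]:
    """Hypothetical CTE body; each evaluation reads the 'clock' once."""
    t = next(_clock)
    return [t, t + 1, t + 2]


def query_inlined() -> bool:
    """Inlined semantics: every reference re-runs the CTE body."""
    left, right = cte_body(), cte_body()
    return sum(left) == sum(right)


def query_materialized() -> bool:
    """Materialized semantics: evaluate once and reuse the same result."""
    shared = cte_body()
    left, right = shared, shared
    return sum(left) == sum(right)


print(query_materialized())  # both references see identical rows
print(query_inlined())       # the two references see different rows
```

With a deterministic CTE body the two strategies agree. The divergence appears only when the CTE depends on something that changes between evaluations, which is why aggregations that join or compare a CTE against itself deserve extra care after a migration.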

Video corner

- Mark Needham recorded a 3-minute introduction to observability and a ~5-minute introduction to ClickStack.

- And if you need more ClickStack, Mike Shi and Dale McDiarmid presented a webinar, Introducing ClickStack: The Future of Observability on ClickHouse.

- Dmitry Pavlov explains why Model Context Protocol completely changed his view of AI and led him to build a production-grade AI agent.

- Robert Schulze dives into the internals of how the JSON data type was built to deliver high-speed analytical performance, without sacrificing flexibility.